Complexity and Uncertainty

Finding a Balance Using First Order Logic and Markov Random Fields

There is a huge need in computer science, specifically in artificial intelligence and machine learning, for ways to represent knowledge and to make predictions about the world and its future.

There are two primary approaches to this problem. The logical approach treats statements as true or false and uses them to make general inferences about the world. The probabilistic approach assigns probabilities to events and then, based on those probabilities, makes probabilistic predictions. Most machine learning algorithms fall in the latter category. There are pros and cons for both sides.

Logical Approach

The logical approach uses First Order Logic, which I have written about briefly in a previous post. The key idea is that you have symbols: constants (nouns, if you will), variables that range over constants, predicates (true-or-false relations over one or more constants/variables), and functions, which return a constant given some constants/variables. Symbols combined with logical connectives and quantifiers (existential and universal) form formulas. A set of formulas is a knowledge base. Once a knowledge base is grounded (its variables replaced by constants), each possible world, or model, assigns a truth value to every grounded statement, and the knowledge base is either true or false in that world.
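To make grounding and worlds a bit more concrete, here is a minimal sketch in Python. The constants (Anna, Bob), the predicates (Smokes, Cancer), and the single formula "for all x, Smokes(x) implies Cancer(x)" are my own illustrative assumptions, not part of any particular library. It enumerates every possible world and keeps the ones where the knowledge base holds.

```python
from itertools import product

# Hypothetical constants and predicates, chosen only for illustration.
constants = ["Anna", "Bob"]
predicates = ["Smokes", "Cancer"]

# Grounding: every predicate applied to every constant gives a ground atom.
ground_atoms = [(p, c) for p in predicates for c in constants]

def formula_holds_for(world, x):
    """Smokes(x) => Cancer(x), evaluated for one constant x."""
    return (not world[("Smokes", x)]) or world[("Cancer", x)]

def knowledge_base_holds(world):
    """Universal quantifier: the formula must hold for every constant."""
    return all(formula_holds_for(world, x) for x in constants)

# A world assigns True or False to every ground atom.
worlds = [
    dict(zip(ground_atoms, values))
    for values in product([False, True], repeat=len(ground_atoms))
]

satisfying = [w for w in worlds if knowledge_base_holds(w)]
print(len(worlds), "possible worlds,", len(satisfying), "satisfy the knowledge base")
```

With two constants and two predicates there are four ground atoms and therefore 16 worlds, 9 of which satisfy the formula. Every other world is simply ruled out.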

It is difficult to find knowledge bases that are both interesting (they contain useful information) and hold in every world we care about. A formula either holds or it does not, so a world that violates even one formula is ruled out completely. This means that knowledge bases can only represent things that are 100% true or 100% false, nothing in between. In our world, that dichotomy is rarely so clear.

The good thing about First Order Logic is that it is able to make generalizations about the world. That is, from the statements in your knowledge base you can infer new statements and add them to it. For example, given the basic facts (axioms) of a branch of math, you can infer theorems. There are also two properties, completeness and soundness, which say that if a statement follows from the knowledge base, it can be inferred, and if a statement can be inferred, it does follow from the knowledge base (respectively). Furthermore, First Order Logic deals with complexity well because a knowledge base is just a collection of facts and rules, and a single rule with variables can cover many objects at once.
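As a rough illustration of inferring new statements from a knowledge base, here is a small forward-chaining sketch. It works on already grounded statements (plain strings), so it is a simplification of real First Order Logic inference, and the facts and rules are hypothetical examples of mine.

```python
def forward_chain(facts, rules):
    """Apply rules (premises -> conclusion) until nothing new can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known

# Hypothetical grounded knowledge base: one fact and two rules.
facts = {"Human(Socrates)"}
rules = [
    (("Human(Socrates)",), "Mortal(Socrates)"),
    (("Mortal(Socrates)",), "WillDie(Socrates)"),
]

derived = forward_chain(facts, rules)
print(sorted(derived))
```

Everything derived this way follows from the starting facts and rules, which is the soundness idea in miniature.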

Probabilistic Approach

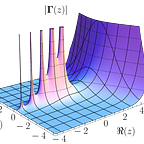

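These are Markov Random Fields, also known as Markov Networks, which I admittedly know less about. The key idea is that you have some graph structure and numeric values associated with the states of that structure. See the image above.

Essentially, it is an undirected graph where each node represents a random variable (some event). You then have potential functions, one for each group of directly connected nodes (a clique), that return a non-negative value (not necessarily between 0 and 1) indicating how compatible a particular combination of values for those nodes is. Multiplying the potentials together and normalizing gives the probability of a full assignment to all the nodes.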
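Here is a minimal sketch of that idea, assuming a tiny chain-shaped graph A - B - C with binary nodes and a made-up potential that prefers neighbours to agree. The graph, the potential values, and the names are all my own assumptions for illustration. It computes the probability of every assignment by brute force, which only works for very small graphs.

```python
from itertools import product

# Undirected chain A - B - C; each node is a binary random variable.
nodes = ["A", "B", "C"]
edges = [("A", "B"), ("B", "C")]

def potential(u, v):
    """Pairwise potential: non-negative, not a probability. Favors agreement."""
    return 2.0 if u == v else 1.0

def unnormalized(assignment):
    """Product of the potentials over all edges for one full assignment."""
    score = 1.0
    for a, b in edges:
        score *= potential(assignment[a], assignment[b])
    return score

assignments = [
    dict(zip(nodes, values)) for values in product([0, 1], repeat=len(nodes))
]

# Z is the normalizing constant that turns scores into probabilities.
Z = sum(unnormalized(x) for x in assignments)
probs = {tuple(x[n] for n in nodes): unnormalized(x) / Z for x in assignments}

for values, p in probs.items():
    print(values, round(p, 3))
```

The potentials on their own are just scores. Only after dividing by Z do the numbers add up to 1.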

The trouble with Markov Random Fields is that they can only make statements about the variables within the model, so there are no general statements like those in First Order Logic. Second, the algorithm has no knowledge or understanding of what the structure of the Markov Random Field actually means. It doesn’t actually understand what is going on.

On the flip side, Markov Networks deal well with uncertainty and can make probabilistic predictions, which is closer to how the real world behaves.

Finding a Balance

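Enter Markov Logic Networks: the confluence of the two. By attaching a weight to each formula in a First Order Logic knowledge base, Markov Logic Networks allow for both uncertainty and complexity. A world that violates a formula becomes less probable rather than impossible, and the higher the weight, the stronger the penalty.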
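As a sketch of how this works, the probability of a world in a Markov Logic Network is proportional to exp(sum of w_i * n_i), where w_i is the weight of formula i and n_i is the number of its groundings that are true in that world. The example below uses one hypothetical formula, "Smokes(x) implies Cancer(x)", with a weight of 1.5 that I picked arbitrarily, and two made-up constants. It enumerates all worlds by brute force, so treat it as an illustration rather than a practical implementation.

```python
import math
from itertools import product

# Hypothetical constants, predicates, and weight, chosen only for illustration.
constants = ["Anna", "Bob"]
ground_atoms = [(p, c) for p in ("Smokes", "Cancer") for c in constants]
weight = 1.5  # weight of the formula Smokes(x) => Cancer(x)

def true_groundings(world):
    """Count the constants x for which Smokes(x) => Cancer(x) holds."""
    return sum(
        (not world[("Smokes", c)]) or world[("Cancer", c)] for c in constants
    )

worlds = [
    dict(zip(ground_atoms, values))
    for values in product([False, True], repeat=len(ground_atoms))
]

# Normalizing constant over all possible worlds.
Z = sum(math.exp(weight * true_groundings(w)) for w in worlds)

def probability(world):
    return math.exp(weight * true_groundings(world)) / Z

# A world where nobody smokes or has cancer satisfies every grounding.
all_false = {atom: False for atom in ground_atoms}
# A world where Anna smokes without cancer violates one grounding.
one_violation = dict(all_false)
one_violation[("Smokes", "Anna")] = True

print(probability(all_false), probability(one_violation))
```

In plain First Order Logic the second world would be impossible. Here it is just less likely, by a factor of exp(1.5) for the one violated grounding.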

Applications

I will write more about Markov Logic Networks in a future piece, but consider some applications:

- Deep Learning, but with built-in constraints: what if your model already knew that one person cannot appear in a single frame more than once (computer vision), or that solid objects cannot intersect?

- Siri was actually one of the most successful applications of Markov Logic Networks